Overview

Scheduling midi events is not as straightforward as one might think. We have to work with samples and sample rates rather than strictly with time (e.g., in 3 seconds do this, then stop in 2 seconds). There's some confusing nomenclature and there are some midi gotchas that we have to be mindful of. But since all my plugins are heavily midi focused, it's a topic that I genuinely like discussing and figuring out.

Prerequisites

You should understand basic VST3 architecture. It's also useful to know Processor-Controller communication strategies. If you aren't up to scratch, then please check out these posts:

- https://nyxfx.dev/vst3-controller/

- https://nyxfx.dev/vst3-controller-createview/

- https://nyxfx.dev/vst3-controller-processor-communication-strategies/

Scenario

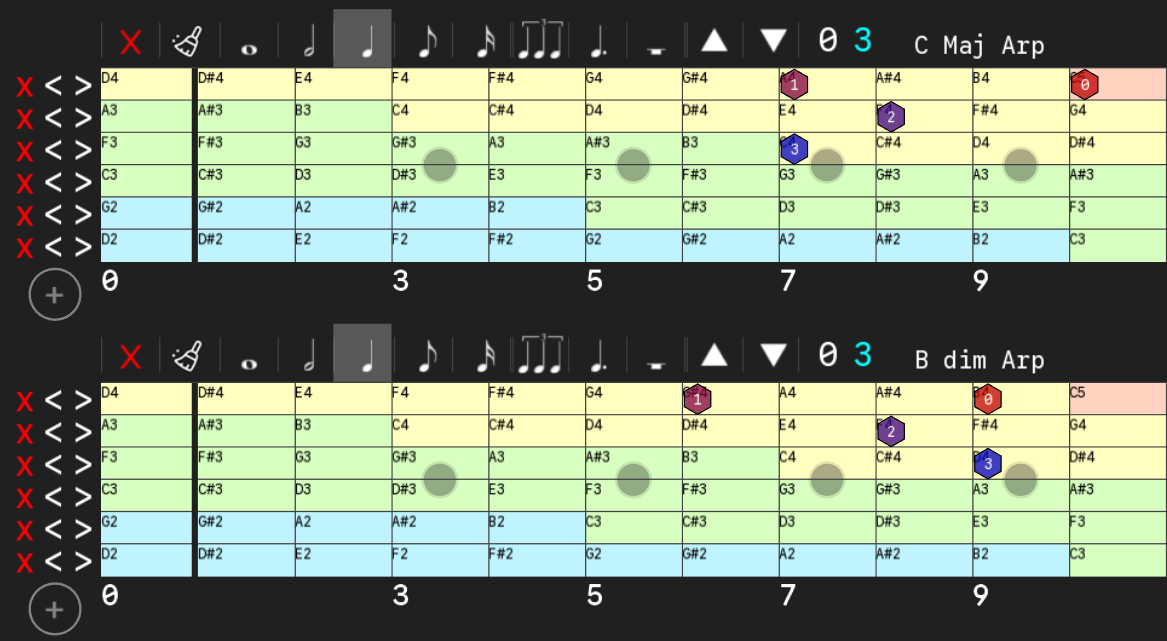

That's a screenshot of an upcoming plugin I've been working on that lets users schedule midi events. It currently shows 8 quarter notes in sequential order: 0 - 3 on the top row and 0 - 3 on the bottom row.

There are two different timing systems in motion here:

- The Controller defines musical intent via quarter notes, half notes, eighth notes, etc., but those values carry no absolute duration in seconds or samples

- The Processor ties the musical intent to a sample-accurate schedule

In order to achieve this, we need to utilize a system that both sides can understand.

Neutral-based timing system

The entire scheduling system is expressed in quarter notes. We're going to create two structs, shared by the Controller and the Processor, that only speak the language of quarter notes.

```cpp
#include <cstdint>

struct MidiDuration_t
{
    // denominator is the note value: 4 = quarter, 8 = eighth, 2 = half
    uint8_t denominator { 4 }; // 1, 2, 4, 8, 16
    bool dotted { false };
    bool triplet { false };
};

struct Midi_t
{
    // the following items are all user-specified ones
    uint8_t note { 0 };
    uint8_t channel { 0 };
    uint8_t velocity { 0 };
    MidiDuration_t duration;

    // we derive these
    uint32_t noteId { 0 };
    double qnStart { 0.0 };
    double qnEnd { 0.0 };
};
```

The Controller

We want the Controller to manage the timeline for us. Even though the Controller doesn't know the BPM or sample rate, it can still give every note a neutral duration by expressing it as a number of quarter notes:

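```cpp
double getDurationInQN( const MidiDuration_t & d )
{
    double qn = 4.0 / d.denominator; // it's that easy: whole = 4 QN, half = 2, quarter = 1
    if ( d.dotted ) qn *= 1.5;        // a dot adds half of the base value
    if ( d.triplet ) qn *= 2.0 / 3.0; // three triplets fill the space of two (kept in double precision)
    return qn;
}
```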
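Because the mapping is pure arithmetic, it can also be checked at compile time. The sketch below is a constexpr copy of getDurationInQN (the constexpr version and the zero-denominator guard are my additions, and it needs C++14) with static_asserts pinning down a few note values:

```cpp
#include <cassert>
#include <cstdint>

struct MidiDuration_t
{
    uint8_t denominator { 4 }; // 1 = whole, 2 = half, 4 = quarter, 8 = eighth, 16 = sixteenth
    bool dotted { false };
    bool triplet { false };
};

// constexpr copy of getDurationInQN so the mapping can be verified by the compiler
constexpr double getDurationInQN( const MidiDuration_t & d )
{
    if ( d.denominator == 0 ) return 0.0; // guard: not a valid note value
    double qn = 4.0 / d.denominator;      // whole = 4 QN, half = 2, quarter = 1, ...
    if ( d.dotted ) qn *= 1.5;            // a dot adds half of the base value
    if ( d.triplet ) qn *= 2.0 / 3.0;     // three triplets fill the space of two
    return qn;
}

static_assert( getDurationInQN( MidiDuration_t{ 1 } ) == 4.0, "whole note" );
static_assert( getDurationInQN( MidiDuration_t{ 4 } ) == 1.0, "quarter note" );
static_assert( getDurationInQN( MidiDuration_t{ 8 } ) == 0.5, "eighth note" );
static_assert( getDurationInQN( MidiDuration_t{ 4, true } ) == 1.5, "dotted quarter" );
static_assert( getDurationInQN( MidiDuration_t{ 8, true } ) == 0.75, "dotted eighth" );
```

An eighth-note triplet comes out at 1/3 QN, which matches what you'd count by hand: three of them fill one quarter note.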

Now we can apply that to our Midi_t:

```cpp
double currentQN = 0.0;

for ( auto & midiEvent : midiEvents )
{
    midiEvent.qnStart = currentQN;
    // for simplicity, we assume all notes are in sequential order
    currentQN += getDurationInQN( midiEvent.duration );
    midiEvent.qnEnd = currentQN;
}
```

Here, midiEvents is our whole timeline: just a std::vector< Midi_t > whose notes are in sequential order.
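To make this concrete for the scenario in the next section, here's a self-contained sketch that lays out four quarter notes (C4, A4, F4, C3) with the loop above. The helper names layOutSequentially and makeScenario are hypothetical, and the note numbers assume the common convention where C4 = MIDI note 60 (some hosts label that same note C3):

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

struct MidiDuration_t
{
    uint8_t denominator { 4 };
    bool dotted { false };
    bool triplet { false };
};

struct Midi_t
{
    uint8_t note { 0 };
    uint8_t channel { 0 };
    uint8_t velocity { 0 };
    MidiDuration_t duration;
    uint32_t noteId { 0 };
    double qnStart { 0.0 };
    double qnEnd { 0.0 };
};

double getDurationInQN( const MidiDuration_t & d )
{
    assert( d.denominator != 0 );
    double qn = 4.0 / d.denominator;
    if ( d.dotted ) qn *= 1.5;
    if ( d.triplet ) qn *= 2.0 / 3.0;
    return qn;
}

// Lays the notes end to end: each note starts where the previous one ended.
void layOutSequentially( std::vector< Midi_t > & midiEvents )
{
    double currentQN = 0.0;
    for ( auto & midiEvent : midiEvents )
    {
        midiEvent.qnStart = currentQN;
        currentQN += getDurationInQN( midiEvent.duration );
        midiEvent.qnEnd = currentQN;
    }
}

// Hypothetical helper: the four quarter notes from the scenario (C4, A4, F4, C3).
std::vector< Midi_t > makeScenario()
{
    std::vector< Midi_t > events;
    for ( const int note : { 60, 69, 65, 48 } )
    {
        Midi_t m;                                 // duration defaults to a quarter note
        m.note = static_cast< uint8_t >( note );
        m.channel = 0;                            // channel index 0, i.e. MIDI channel 1
        m.velocity = 100;                         // arbitrary
        events.push_back( m );
    }
    layOutSequentially( events );
    return events;
}
```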

The Maths & Scheduling by Hand

Before getting lost in Processor code, let's analyze a real-world scenario so we're all on the same page. Let's assume the following:

| Variable | Value |
|---|---|
| Sample Rate | 48,000 samples/sec |
| Block Size | 256 samples per process() call |
| BPM | 120 bpm |
| Scheduled Midi Notes | 4 sequential quarter notes: C4, A4, F4, C3 |
| Midi Channel | Channel 1 |

The BPM is what turns musical intent into something actionable. But the BPM is quarter notes per minute and we need it per second.

| Derived Variables | Formula | Value |
|---|---|---|
| Quarter Notes per second (QNS) | BPM / 60 | 2 QN/sec |
| Seconds per Quarter Note | 1 / QNS | 0.5 sec |
| Samples per Quarter Note | SecondsPerQuarterNote * SampleRate | 24,000 samples |
| Seconds per Block | BlockSize / SampleRate | ~0.005333 sec |
| Quarter Notes per Block | SecondsPerBlock * QNS | ~0.010667 QN/block |
| QN per Sample | (BPM / 60) / SampleRate | ~0.0000416667 QN/sample |

This means that each process block advances the project timeline by ~0.010667 quarter notes @ 120 BPM. As you can see, a block covers a very small slice of time.
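The derived values are easy to reproduce in code. A minimal sketch, using a hypothetical BlockTiming struct and deriveTiming helper, and assuming the tempo is constant across the block:

```cpp
#include <cassert>

// Hypothetical container for the derived values in the table above.
struct BlockTiming
{
    double qnPerSecond;
    double secondsPerQN;
    double samplesPerQN;
    double secondsPerBlock;
    double qnPerBlock;
    double qnPerSample;
};

BlockTiming deriveTiming( double bpm, double sampleRate, int blockSize )
{
    assert( bpm > 0.0 && sampleRate > 0.0 && blockSize > 0 );
    BlockTiming t {};
    t.qnPerSecond     = bpm / 60.0;                  // BPM is quarter notes per minute
    t.secondsPerQN    = 1.0 / t.qnPerSecond;
    t.samplesPerQN    = t.secondsPerQN * sampleRate;
    t.secondsPerBlock = blockSize / sampleRate;
    t.qnPerBlock      = t.secondsPerBlock * t.qnPerSecond;
    t.qnPerSample     = t.qnPerSecond / sampleRate;
    return t;
}
```

For 120 BPM, 48 kHz and 256-sample blocks this gives 24,000 samples per quarter note, which is also why 1 / qnPerBlock works out to exactly 24,000 / 256 = 93.75 blocks per quarter note.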

If we look at our midi note schedule, then according to our Controller, the Processor should receive the following information:

| Note | QN Start | QN End |
|---|---|---|
| C4 | 0.0 | 1.0 |
| A4 | 1.0 | 2.0 |
| F4 | 2.0 | 3.0 |
| C3 | 3.0 | 4.0 |

Processor Process Block 1:

- qnStartRange = projectTimeMusic = 0.0 QN
- qnEndRange = qnStartRange + quarterNotesPerBlock = 0.0 QN + 0.010667 QN
- Does C4 start within 0.0 - 0.010667? Yes
  - Schedule the midi on event for C4
- Does C4 end before the end of the block?
  - Clearly not, because 0.010667 is way less than 1.0
- How many blocks until C4 expires?
  - 1 / 0.010667 = 93.75 blocks (24,000 / 256), so the note-off lands in the 94th process() call (block index 93, counting from 0), 0.75 * 256 = 192 samples into that block
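The same hand calculation can be done in whole samples, which sidesteps floating-point fractions. This sketch (the locate helper and BlockPosition struct are hypothetical) assumes the tempo and block size never change and that playback starts at QN 0, neither of which a real host guarantees:

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Where a quarter-note position lands: which block, and how far into it.
struct BlockPosition
{
    int64_t blockIndex;   // 0-based: block 0 is the first process() call
    int32_t sampleOffset; // 0 .. blockSize - 1
};

// Assumes constant tempo and block size from QN 0 onward.
BlockPosition locate( double qn, double bpm, double sampleRate, int32_t blockSize )
{
    assert( qn >= 0.0 && bpm > 0.0 && sampleRate > 0.0 && blockSize > 0 );
    const double samplesPerQN = ( 60.0 / bpm ) * sampleRate;
    // round to the nearest whole sample so tiny errors don't shift the result
    const int64_t absoluteSample = std::llround( qn * samplesPerQN );
    return { absoluteSample / blockSize,
             static_cast< int32_t >( absoluteSample % blockSize ) };
}
```

For C4's note-off at QN 1.0 this gives absolute sample 24,000, i.e. block index 93 at offset 192.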

The Processor Isn't Aware

The Processor isn't handed a block index or a running block counter. Each process() call tells us where the block starts musically (projectTimeMusic, in quarter notes) and how many samples it contains (numSamples). The ProcessContext does also report projectTimeSamples, but because our schedule lives in quarter notes, all timing decisions are expressed relative to the current block's quarter-note range.

That means for every subsequent process() block we need to know the following:

- Did the user move the playhead by fast-forwarding, rewinding, looping, or stopping?
- Do we need to trigger a note-on in this block?
  - Is this note already on, and if so, do we retrigger it?
- Do we need to trigger a note-off?
  - If so, is there a corresponding note-on?
- If we emit any midi events, where in the block does each one belong?
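The first question, whether the playhead moved, can be answered by remembering where the previous block ended and comparing it with the next projectTimeMusic. A minimal sketch, assuming a hypothetical PlayheadTracker helper and a tolerance of roughly one sample's worth of quarter notes:

```cpp
#include <cassert>
#include <cmath>
#include <optional>

// Hypothetical helper: flags a discontinuity when a block does not start
// where the previous block ended (seek, loop wrap, stop/start).
class PlayheadTracker
{
public:
    // toleranceQN absorbs small floating-point drift between our estimate
    // of the block end and the host's next projectTimeMusic
    explicit PlayheadTracker( double toleranceQN ) : m_toleranceQN( toleranceQN ) {}

    // Call once per process() with this block's QN range. Returns true when the
    // playhead jumped, or on the very first block, when there is no history yet.
    bool update( double qnStart, double qnEnd )
    {
        assert( qnEnd >= qnStart );
        const bool jumped = !m_expectedStart.has_value() ||
                            std::fabs( qnStart - *m_expectedStart ) > m_toleranceQN;
        m_expectedStart = qnEnd;
        return jumped;
    }

    // e.g. when the transport stops: forget history so the next block resyncs
    void reset() { m_expectedStart.reset(); }

private:
    double m_toleranceQN;
    std::optional< double > m_expectedStart;
};
```

When update() returns true, a reasonable response is to send note-offs for everything still active and let the normal scheduling pick up from the new position.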

The Processor

Processor initialize() setup

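First, let's ask the host to provide the ProcessContext data we need. These are requests, so in process() the values should still be checked against the ProcessContext validity flags: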

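```cpp
tresult PLUGIN_API VSTProcessor::initialize( FUnknown * context )
{
    // let the base class (assumed: Steinberg::Vst::AudioEffect) initialize first
    const tresult result = AudioEffect::initialize( context );
    if ( result != kResultOk )
        return result;

    // without an event output bus, data.outputEvents is typically null in
    // process(); skip this if you already create one elsewhere
    addEventOutput( STR16( "Event Out" ) );

    // request the following items
    processContextRequirements.needTransportState();
    processContextRequirements.needTempo();
    processContextRequirements.needTimeSignature();
    processContextRequirements.needProjectTimeMusic();

    return kResultOk;
}
```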

Processor process() scheduling

The History of a Midi Event

We MUST keep track of what notes we've triggered. I'm not going to get into the techniques of that in this post, but I will define a really simple API for us to use.

```cpp
class IMidiNoteTrackable
{
public:
    virtual ~IMidiNoteTrackable() = default;

    // adds this to our history, i.e., it's "ON" now
    virtual void addOnEvent( const Midi_t & midi ) = 0;

    // explicitly removes a midi note out of its expiration order
    virtual void addOffEvent( const Midi_t & midi ) = 0;

    // returns the next active note whose qnEnd falls in (qnStart, qnEnd]
    // and removes it from the active set, so repeated calls eventually
    // return nullptr (no more notes expire within this block)
    // in production, I'd use std::optional<> instead
    virtual const Midi_t * getNextExpired(
        const double qnStart,
        const double qnEnd ) = 0;

    // is this note active?
    virtual bool isMidiActive( const Midi_t & midi ) = 0;

    // must return a unique ID for the lifetime of an active note
    // we use this for Midi_t::noteId, which gets consumed by the
    // VST3 event buffer
    virtual uint32_t getNextUniqueId() const = 0;
};
```
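To make the contract concrete, here's a deliberately simple, hypothetical implementation (SimpleNoteTracker). It mirrors the IMidiNoteTrackable methods without deriving from the interface, so it compiles on its own. It assumes a note can be identified by channel, pitch and qnStart, and it removes a note from the active set when getNextExpired() hands it back, which is what lets a while-loop over getNextExpired() terminate:

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Trimmed copy of Midi_t (duration omitted; not needed here).
struct Midi_t
{
    uint8_t note { 0 };
    uint8_t channel { 0 };
    uint8_t velocity { 0 };
    uint32_t noteId { 0 };
    double qnStart { 0.0 };
    double qnEnd { 0.0 };
};

// Hypothetical tracker; a linear scan is fine for a handful of active notes.
class SimpleNoteTracker
{
public:
    void addOnEvent( const Midi_t & midi )
    {
        assert( midi.qnEnd > midi.qnStart );
        m_active.push_back( midi );
    }

    void addOffEvent( const Midi_t & midi )
    {
        m_active.erase( std::remove_if( m_active.begin(), m_active.end(),
                            [ & ]( const Midi_t & m ) { return sameNote( m, midi ); } ),
                        m_active.end() );
    }

    // Removes and returns the next active note whose qnEnd is in (qnStart, qnEnd].
    // The returned pointer stays valid until the next call.
    const Midi_t * getNextExpired( const double qnStart, const double qnEnd )
    {
        const auto it = std::find_if( m_active.begin(), m_active.end(),
            [ & ]( const Midi_t & m ) { return m.qnEnd > qnStart && m.qnEnd <= qnEnd; } );
        if ( it == m_active.end() ) return nullptr;
        m_lastExpired = *it;
        m_active.erase( it );
        return &m_lastExpired;
    }

    bool isMidiActive( const Midi_t & midi ) const
    {
        return std::any_of( m_active.begin(), m_active.end(),
            [ & ]( const Midi_t & m ) { return sameNote( m, midi ); } );
    }

    // Monotonic counter; declared const like the interface, hence `mutable`.
    uint32_t getNextUniqueId() const { return m_nextId++; }

private:
    // Match on channel, pitch and start rather than noteId, because the
    // timeline's copy of a note may not have its noteId assigned yet.
    static bool sameNote( const Midi_t & a, const Midi_t & b )
    {
        return a.channel == b.channel && a.note == b.note && a.qnStart == b.qnStart;
    }

    std::vector< Midi_t > m_active;
    Midi_t m_lastExpired;
    mutable uint32_t m_nextId { 1 };
};
```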

Scheduling sampleOffset

A sampleOffset only matters to the current block. If your block size is 256, then your sampleOffset can only be 0-255. We don't schedule in the future using sampleOffset. We only find out where in the block we need to trigger events that belong to this block.

So, once we know a MIDI event belongs in the current block, we still need to emit it at the correct sample offset.

Because the host only accepts offsets relative to the current block, we compute the offset by projecting the event's quarter-note position into the block's sample range.

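```cpp
#include <algorithm> // std::clamp
#include <cstdint>

int32_t getSampleOffset( double qnEvent,
                         double qnBlockStart,
                         double qnPerSample,
                         int32_t blockSize )
{
    // how far into the block the event sits, in quarter notes
    const auto qnIntoBlock = qnEvent - qnBlockStart;
    // convert quarter notes to samples (static_cast truncates toward zero)
    const auto offset = static_cast< int32_t >( qnIntoBlock / qnPerSample );
    // keep the result inside the block: 0 .. blockSize - 1
    return std::clamp< int32_t >( offset, 0, blockSize - 1 );
}
```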
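One thing to be aware of: static_cast truncates, so floating-point noise such as 191.9999999 becomes 191 rather than 192. A variant that rounds to the nearest sample is sketched below (rounding is my choice here, not something the SDK requires), and the checks after it use the C4 example at 120 BPM and 48 kHz:

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Rounding variant of getSampleOffset: nearest sample, then clamped to the block.
int32_t getSampleOffset( double qnEvent,
                         double qnBlockStart,
                         double qnPerSample,
                         int32_t blockSize )
{
    assert( qnPerSample > 0.0 && blockSize > 0 );
    const double qnIntoBlock = qnEvent - qnBlockStart;
    const auto offset = static_cast< int32_t >( std::lround( qnIntoBlock / qnPerSample ) );
    return std::clamp< int32_t >( offset, 0, blockSize - 1 );
}
```

In block index 93 (which starts at sample 23,808), C4's note-off at QN 1.0 maps to offset 192. Events before the block start clamp to 0, and events at or past the block end clamp to 255.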

Let's Schedule!

Why this is split into two passes

We process note-off before note-on events to ensure correct ordering when multiple events land in the same block. This prevents cases where a note-on and note-off share a block but are emitted in the wrong order due to iteration sequence.
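If you'd rather not depend on loop order at all, another option is to collect a block's events first and sort them before emitting. This is an alternative to the two-pass approach, not what the process() code in this section does; PendingEvent and sortForEmission are hypothetical names:

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical staging record for one event in the current block.
struct PendingEvent
{
    int32_t sampleOffset;
    bool isNoteOn;
    uint8_t pitch;
};

// Orders events by sample offset; at the same offset, note-offs go first.
void sortForEmission( std::vector< PendingEvent > & events )
{
    std::stable_sort( events.begin(), events.end(),
        []( const PendingEvent & a, const PendingEvent & b )
        {
            if ( a.sampleOffset != b.sampleOffset ) return a.sampleOffset < b.sampleOffset;
            return !a.isNoteOn && b.isNoteOn;
        } );
}
```

std::stable_sort keeps the original relative order of events that compare equal, e.g. two note-ons at the same offset.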

```cpp
tresult PLUGIN_API VSTProcessor::process( Vst::ProcessData & data )
{
    // ... error-checking elided...

    // tempo is beats (i.e., quarter notes) per minute (BPM)
    // tempo / 60 = beats per second
    // beats per second / sampleRate = QN per sample
    // (the ProcessContext also carries the current sample rate)
    const auto qnPerSample =
        ( data.processContext->tempo / 60.0 ) / data.processContext->sampleRate;

    // projectTimeMusic is in quarter notes already &
    // NOT samples or seconds
    const auto qnStartRange = data.processContext->projectTimeMusic;
    const auto qnEndRange = qnStartRange + data.numSamples * qnPerSample;

    // first, turn off any expired midi events
    while ( const auto * expired =
                m_trackable.getNextExpired( qnStartRange, qnEndRange ) )
    {
        // find out where in this block we need to turn it off
        const auto offOffset = getSampleOffset(
            expired->qnEnd,
            qnStartRange,
            qnPerSample,
            data.numSamples );

        emitOffNote( data, *expired, offOffset );
    }

    // second, schedule events
    for ( auto & midiEvent : midiEvents )
    {
        // quick reject: this note doesn't intersect this block at all
        if ( midiEvent.qnEnd <= qnStartRange ||
             midiEvent.qnStart >= qnEndRange ) continue;

        // Midi Event ON occurs in this block
        if ( midiEvent.qnStart >= qnStartRange &&
             midiEvent.qnStart < qnEndRange )
        {
            // Prevent re-triggering if we already sent ON
            if ( !m_trackable.isMidiActive( midiEvent ) )
            {
                const auto onOffset = getSampleOffset(
                    midiEvent.qnStart,
                    qnStartRange,
                    qnPerSample,
                    data.numSamples );

                // set the note id. we'll need it to match
                // the on and off events
                midiEvent.noteId = m_trackable.getNextUniqueId();

                emitOnNote( data, midiEvent, onOffset );
                m_trackable.addOnEvent( midiEvent );
            }

            // Midi Event OFF also occurs in this block (short notes)
            if ( midiEvent.qnEnd > qnStartRange &&
                 midiEvent.qnEnd <= qnEndRange )
            {
                const auto offOffset = getSampleOffset(
                    midiEvent.qnEnd,
                    qnStartRange,
                    qnPerSample,
                    data.numSamples );

                emitOffNote( data, midiEvent, offOffset );
                m_trackable.addOffEvent( midiEvent );
            }
            // else: OFF will be emitted later via getNextExpired()
        }
    }

    return Steinberg::kResultOk;
}
```
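To see the whole loop behave end to end without a host, here's an offline simulation of the same two-pass logic. It's a sketch under stated assumptions: constant 120 BPM, 48 kHz, 256-sample blocks, the transport playing from QN 0, a vector of indices standing in for the note tracker, offsets rounded to the nearest sample, and "emitting" meaning appending to a log. Note, LoggedEvent, toSampleOffset and simulate are all hypothetical names:

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Note { uint8_t pitch; double qnStart; double qnEnd; };
struct LoggedEvent { int64_t block; int32_t sampleOffset; bool isNoteOn; uint8_t pitch; };

// QN position -> offset inside the block, rounded to the nearest sample and clamped
int32_t toSampleOffset( double qnEvent, double qnBlockStart, double qnPerSample, int32_t blockSize )
{
    const auto offset = static_cast< int32_t >( std::lround( ( qnEvent - qnBlockStart ) / qnPerSample ) );
    return std::clamp< int32_t >( offset, 0, blockSize - 1 );
}

std::vector< LoggedEvent > simulate( const std::vector< Note > & timeline, double bpm,
                                     double sampleRate, int32_t blockSize, int64_t numBlocks )
{
    assert( bpm > 0.0 && sampleRate > 0.0 && blockSize > 0 );
    const double qnPerSample = ( bpm / 60.0 ) / sampleRate;
    std::vector< std::size_t > active; // indices of notes that are currently ON
    std::vector< LoggedEvent > emitted;

    for ( int64_t block = 0; block < numBlocks; ++block )
    {
        // stands in for projectTimeMusic; the end uses the same formula as the next start
        const double qnStartRange = static_cast< double >( block * blockSize ) * qnPerSample;
        const double qnEndRange = static_cast< double >( ( block + 1 ) * blockSize ) * qnPerSample;

        // pass 1: note-offs for ON notes that end in (qnStartRange, qnEndRange]
        for ( auto it = active.begin(); it != active.end(); )
        {
            const Note & n = timeline[ *it ];
            if ( n.qnEnd > qnStartRange && n.qnEnd <= qnEndRange )
            {
                emitted.push_back( { block, toSampleOffset( n.qnEnd, qnStartRange, qnPerSample, blockSize ), false, n.pitch } );
                it = active.erase( it );
            }
            else
            {
                ++it;
            }
        }

        // pass 2: note-ons starting in [qnStartRange, qnEndRange), plus short notes ending here too
        for ( std::size_t i = 0; i < timeline.size(); ++i )
        {
            const Note & n = timeline[ i ];
            if ( n.qnStart < qnStartRange || n.qnStart >= qnEndRange ) continue;

            if ( std::find( active.begin(), active.end(), i ) == active.end() )
            {
                emitted.push_back( { block, toSampleOffset( n.qnStart, qnStartRange, qnPerSample, blockSize ), true, n.pitch } );
                active.push_back( i );
            }
            if ( n.qnEnd <= qnEndRange )
            {
                emitted.push_back( { block, toSampleOffset( n.qnEnd, qnStartRange, qnPerSample, blockSize ), false, n.pitch } );
                active.erase( std::remove( active.begin(), active.end(), i ), active.end() );
            }
        }
    }
    return emitted;
}
```

Running it over the four-note timeline gives C4's note-on in block 0 at offset 0, then C4's note-off and A4's note-on both in block 93 at offset 192, in that order. One design note: each block's end here comes from the same formula as the next block's start, so adjacent ranges meet exactly. In a real host, qnEndRange is derived from the tempo while the next projectTimeMusic comes from the host, so a note that ends exactly on a block boundary is worth testing explicitly.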

Emitting the Notes

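```cpp
#include "pluginterfaces/vst/ivstaudioprocessor.h" // Steinberg::Vst::ProcessData
#include "pluginterfaces/vst/ivstevents.h"         // Steinberg::Vst::Event, IEventList

void emitOnNote( Steinberg::Vst::ProcessData & data,
                 const Midi_t & midi,
                 int32_t sampleOffset )
{
    // no event output bus means there's nowhere to send the note
    if ( data.outputEvents == nullptr ) return;

    Steinberg::Vst::Event e {};
    e.type = Steinberg::Vst::Event::kNoteOnEvent;
    e.sampleOffset = sampleOffset;
    e.noteOn.channel = midi.channel;
    e.noteOn.pitch = midi.note;
    // we use uint8_t to save space for communication
    // but the Event expects a value from 0.f - 1.f
    e.noteOn.velocity = static_cast< float >( midi.velocity ) / 127.f;
    e.noteOn.noteId = static_cast< Steinberg::int32 >( midi.noteId );

    // now add the event to the outbound event buffer
    if ( data.outputEvents->addEvent( e ) != Steinberg::kResultOk )
    {
        // TODO: in production, you'll want to handle this
    }
}
```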

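The note-off version is nearly identical (it uses Steinberg::Vst::Event::kNoteOffEvent, fills e.noteOff instead of e.noteOn, and reuses the noteId of the matching note-on), so I'm not going to add it.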

Conclusion

As you can see, scheduling Midi is not so straightforward. All my plugins utilize Midi extensively, so this is a subject that I've had to really get comfortable with. In the end, once you get a solid Midi Note Tracking system in place, you'll find yourself reusing it often. So it's mostly a "build once, reuse always" type of deal.