These PreShaders evolve over time, especially as I integrate them into existing effects or add new ones. The architecture remains unchanged. A running changelog at the bottom of this post tracks what has changed.

The Problem

In the nyx.vfx plugin the Shader stage has traditionally been a linear handoff chain, where each shader consumes the texture output from the previous one:

Particles -> Mesh -> sceneTexture (textureA)

textureA -> Shader1 -> textureB

textureB -> Shader2 -> textureC

…

Elegant in theory. Painful & limiting in practice.

After 2–3 shader passes, the pipeline has mutated the original scene so aggressively that downstream effects (e.g., feedback, blur, smearing, and motion-driven warps) no longer have reliable truth to sample from. The result is a stack of shaders making decisions based on increasingly corrupted textures.

What we actually need:

- A stable scene texture containing particle + mesh output

- Auxiliary per-frame fields that preserve spatial and temporal meaning

- A way for shaders to sample those fields without recomputing them

- All of this within a hard 1/60 s (~16.7 ms) frame budget per render channel

What Are PreShaders?

A PreShader is a lightweight rendering pass that produces a minimal texture (aka auxiliary texture) containing just one kind of useful data, derived after Mesh rendering but before the Shader stage of the plugin's pipeline.

They solve three core issues:

- Preserving reference truth: They expose stable textures any shader can sample, such as density, motion, or masks.

- Efficient computation: They render only what's needed into render targets (RTs) instead of processing the full frame buffer.

- Controlled blending flexibility: They let you mix raw scene data, shader composites, and original source context using a unified mask system.

Core PreShader Types

| PreShader | Captures | Purpose |
|---|---|---|
| DensityFieldPreShader | Energy-weighted particle positions | "Where are particles?" |
| LineDensityPreShader | Energy-weighted line positions | "Where is mesh?" |
| BrightPassPreShader | Thresholded luminance bands | "Where is activity bright?" |
| VelocityPreShader | Signed motion vectors (RG = velocity.xy) | "Where are particles going?" |

Rules of thumb:

- One field = one PreShader

- Immutable, single-purpose

- Computed once per frame

- Scoped to a Channel pipeline
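To make the "computed once per frame" rule concrete, here is a minimal sketch of how a PreShader base class could guard against re-rendering within the same frame. Everything in it is hypothetical: `PreShaderSketch`, `DensityFieldSketch`, `TextureHandle`, and the frame-index parameter are illustration names, not the actual nyx.vfx classes.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical sketch; none of these names are confirmed nyx.vfx API.
using TextureHandle = std::uint32_t; // stand-in for a GPU render target id

class PreShaderSketch
{
public:
    explicit PreShaderSketch(TextureHandle target) : m_target(target) {}
    virtual ~PreShaderSketch() = default;

    // Renders the field at most once per frame; repeat calls within the
    // same frame reuse the texture that was already produced.
    TextureHandle update(std::uint64_t frameIndex)
    {
        // Assumed precondition: frame indices never move backwards.
        assert(!m_hasFrame || frameIndex >= m_lastFrame);
        if (!m_hasFrame || frameIndex != m_lastFrame)
        {
            render();
            m_lastFrame = frameIndex;
            m_hasFrame = true;
            ++m_renderCount;
        }
        return m_target;
    }

    // Number of times render() actually ran (handy when debugging).
    std::uint64_t renderCount() const { return m_renderCount; }

protected:
    // One field = one PreShader: each subclass draws exactly one field.
    virtual void render() = 0;

private:
    TextureHandle m_target;
    std::uint64_t m_lastFrame = 0;
    std::uint64_t m_renderCount = 0;
    bool m_hasFrame = false;
};

class DensityFieldSketch final : public PreShaderSketch
{
public:
    using PreShaderSketch::PreShaderSketch;

protected:
    void render() override { /* the GPU draw call would go here */ }
};
```

Under this sketch, any number of shaders can ask for the density texture in a frame, and only the first request pays for the render.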

Design Choices

Two possible architectures:

Choice A: Shared Per-Channel PreShader Pipeline

Particles/Mesh -> sceneTexture

sceneTexture -> PreShaderPipeline -> { density, mask, brightPass, velocity }

Shaders sample scene + aux fields -> final texture

Pros

- Predictable, centralized, easy to reason about

- Aux textures generated once per frame per channel

- Field reuse across multiple shader effects

- Temporal fields like accumulation/feedback are stable and correct

- Debuggable by visualizing aux RTs independently

Cons

- Per-shader specialization requires opt-in toggles (acceptable trade-off)

- Shared PreShader settings for all Shaders (less flexibility)

Choice B: Each Shader Builds Its Own PrePasses

sceneTexture -> ShaderA builds PrePasses -> textureB

textureB -> ShaderB builds PrePasses -> textureC

Pros

- Encapsulated, effect-specific RT generation

Cons

- Massive redundant GPU work if multiple shaders need the same fields

- Field inconsistency depending on shader ordering

- Temporal accumulation correctness becomes fragile

- Debugging fields is painful because RTs are hidden inside effects

- Shaders stop being post passes and start acting like mini pipelines
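The redundancy argument can be put in numbers. The sketch below counts pre-passes per frame under both designs, given one requirement bitmask per shader: the shared pipeline renders the union of the requested fields, while per-shader pre-passes render every requested field again for each shader. The names `countBits`, `PassCounts`, and `countPrePasses` are hypothetical, and the model assumes every field costs one pass.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

using AuxMask = std::uint8_t;

// Counts the set bits in a mask (one bit = one auxiliary field).
inline int countBits(AuxMask m)
{
    int n = 0;
    while (m != 0)
    {
        m = static_cast<AuxMask>(m & (m - 1)); // clear the lowest set bit
        ++n;
    }
    return n;
}

struct PassCounts
{
    int shared;    // Choice A: each requested field rendered once
    int perShader; // Choice B: each shader renders its own fields
};

// Hypothetical cost model: one pass per field, no other overhead.
inline PassCounts countPrePasses(const std::vector<AuxMask>& masks)
{
    AuxMask unionMask = 0;
    int perShader = 0;
    for (AuxMask m : masks)
    {
        unionMask = static_cast<AuxMask>(unionMask | m);
        perShader += countBits(m);
    }
    const int shared = countBits(unionMask);
    assert(shared <= perShader); // sharing never needs more passes
    return {shared, perShader};
}
```

For three shaders requesting all four fields, two fields, and one field, this model gives 4 passes for the shared pipeline versus 7 for per-shader pre-passes, and the gap grows with every shader added to the stack.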

Design Decision: Choice A

- The engine supports "limitless" stacked shaders

- Most effects will sample the same auxiliary fields

- Temporal PreShader fields (like feedback/smear) must update once per frame, not per shader

- The modest loss of per-shader granularity is worth the stability and performance gains

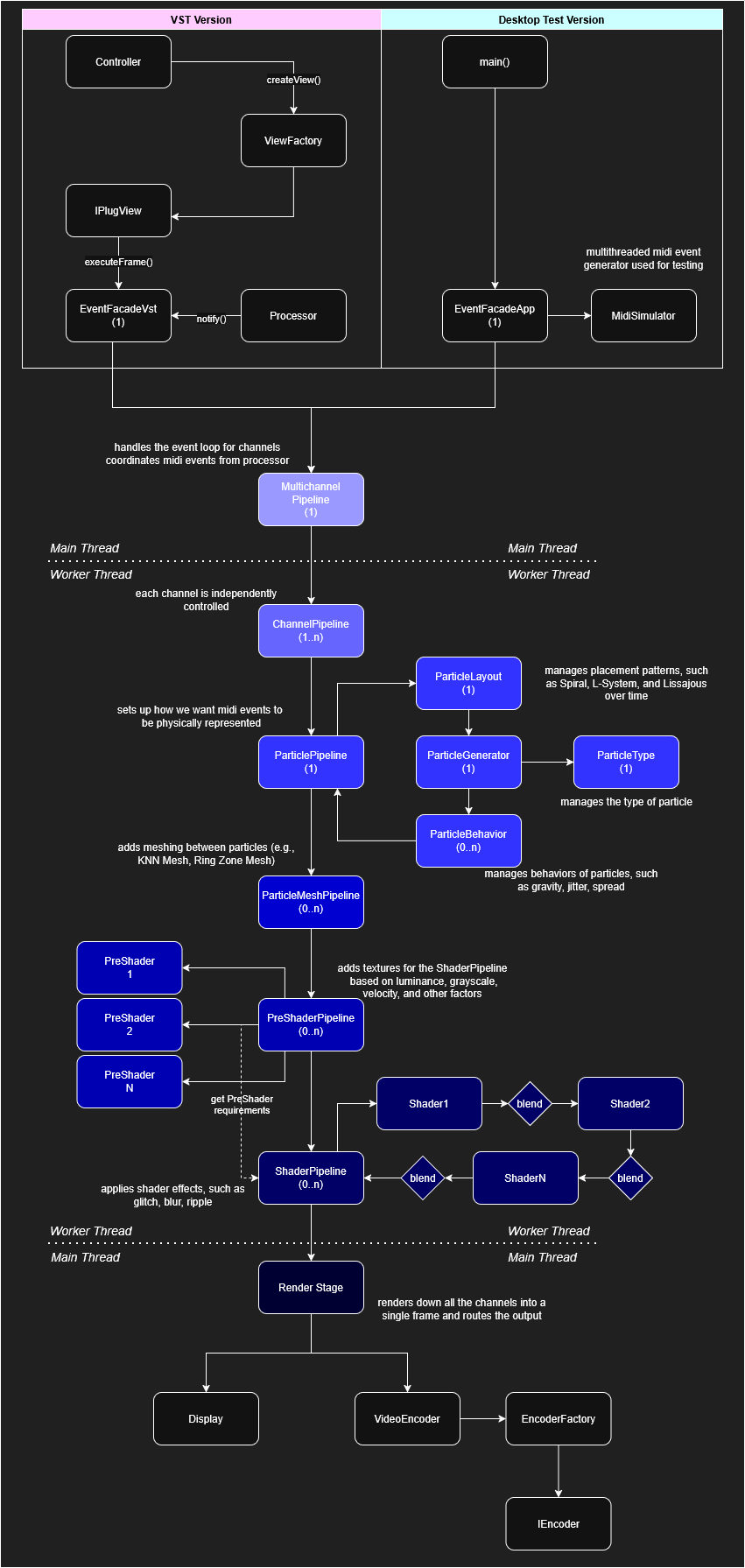

Architecture Update

Integration Concept

Dependency resolution happens through a bitmask, queried by the ShaderPipeline:

```cpp
#include <cstdint>

enum class E_PreShaderType : uint8_t
{
    Density     = 1,
    LineDensity = 2,
    BrightPass  = 4,
    Velocity    = 8
};

using AuxMask = uint8_t;

// IShader is the plugin's shader interface (declared elsewhere)
class MyCustomShader final : public IShader
{
public:
    // all the shaders identify which PreShaders they need access to
    AuxMask requiredAux() const override
    {
        // some shaders will only use one or two (if any)
        return static_cast<AuxMask>(
            static_cast<AuxMask>(E_PreShaderType::Density) |
            static_cast<AuxMask>(E_PreShaderType::LineDensity) |
            static_cast<AuxMask>(E_PreShaderType::Velocity) |
            static_cast<AuxMask>(E_PreShaderType::BrightPass));
    }
};
```
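One way the ShaderPipeline could answer the bitmask query is to OR together the `requiredAux()` of every active shader. The sketch below is an assumption about that step, not the plugin's code: `ShaderPipelineSketch`, `BlurSketch`, `WarpSketch`, and `toMask` are hypothetical names, and `IShader` is reduced to the single method shown in this post.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <utility>
#include <vector>

enum class E_PreShaderType : std::uint8_t
{
    Density = 1, LineDensity = 2, BrightPass = 4, Velocity = 8
};
using AuxMask = std::uint8_t;

constexpr AuxMask toMask(E_PreShaderType t) { return static_cast<AuxMask>(t); }

// Minimal stand-in for the IShader interface (only the method used here).
class IShader
{
public:
    virtual ~IShader() = default;
    virtual AuxMask requiredAux() const = 0;
};

// Two hypothetical shaders with different requirements.
class BlurSketch final : public IShader
{
public:
    AuxMask requiredAux() const override { return toMask(E_PreShaderType::Density); }
};

class WarpSketch final : public IShader
{
public:
    AuxMask requiredAux() const override
    {
        return static_cast<AuxMask>(toMask(E_PreShaderType::Density) |
                                    toMask(E_PreShaderType::Velocity));
    }
};

class ShaderPipelineSketch
{
public:
    void add(std::unique_ptr<IShader> shader)
    {
        assert(shader != nullptr);
        m_shaders.push_back(std::move(shader));
    }

    // Union of every active shader's requirements.
    AuxMask getAuxMask() const
    {
        AuxMask mask = 0;
        for (const auto& shader : m_shaders)
            mask = static_cast<AuxMask>(mask | shader->requiredAux());
        return mask;
    }

private:
    std::vector<std::unique_ptr<IShader>> m_shaders;
};
```

With a blur and a warp shader active, the combined mask is `Density | Velocity`, so BrightPass and LineDensity never need to be rendered for that channel.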

The ChannelPipeline orchestrates everything without PreShader/Shader stages talking directly:

```cpp
m_preShaderPipeline.apply(
    inputs,                         // all particles, meshes, and original texture
    m_preShaderOutputs,             // PreShader textures to be created
    m_shaderPipeline.getAuxMask()); // PreShader textures needed for its active shaders
```

This keeps the architecture clean and ensures real-time predictability.
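As a rough sketch of what the mask buys on the PreShader side, the pipeline can skip any pass whose bit is not set. This is a simplified, hypothetical version: `PreShaderPipelineSketch` and `PreShaderEntry` are illustration names, the real `apply` also takes inputs and outputs, and each pass is modeled as a plain callback instead of a GPU render.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <utility>
#include <vector>

using AuxMask = std::uint8_t;

// Hypothetical registration record: one bit, one render callback.
struct PreShaderEntry
{
    AuxMask bit;
    std::function<void()> render;
};

class PreShaderPipelineSketch
{
public:
    void add(AuxMask bit, std::function<void()> render)
    {
        // Each PreShader owns exactly one bit of the mask.
        assert(bit != 0 && (bit & (bit - 1)) == 0);
        m_entries.push_back({bit, std::move(render)});
    }

    // Runs only the requested passes and returns the mask of fields
    // that were actually produced this frame.
    AuxMask apply(AuxMask requested) const
    {
        AuxMask produced = 0;
        for (const auto& entry : m_entries)
        {
            if ((requested & entry.bit) != 0)
            {
                entry.render();
                produced = static_cast<AuxMask>(produced | entry.bit);
            }
        }
        return produced;
    }

private:
    std::vector<PreShaderEntry> m_entries;
};
```

If no active shader asks for a field, its pass costs nothing, which is what keeps the frame budget predictable as the shader stack changes.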

Use Case: Noise Warp

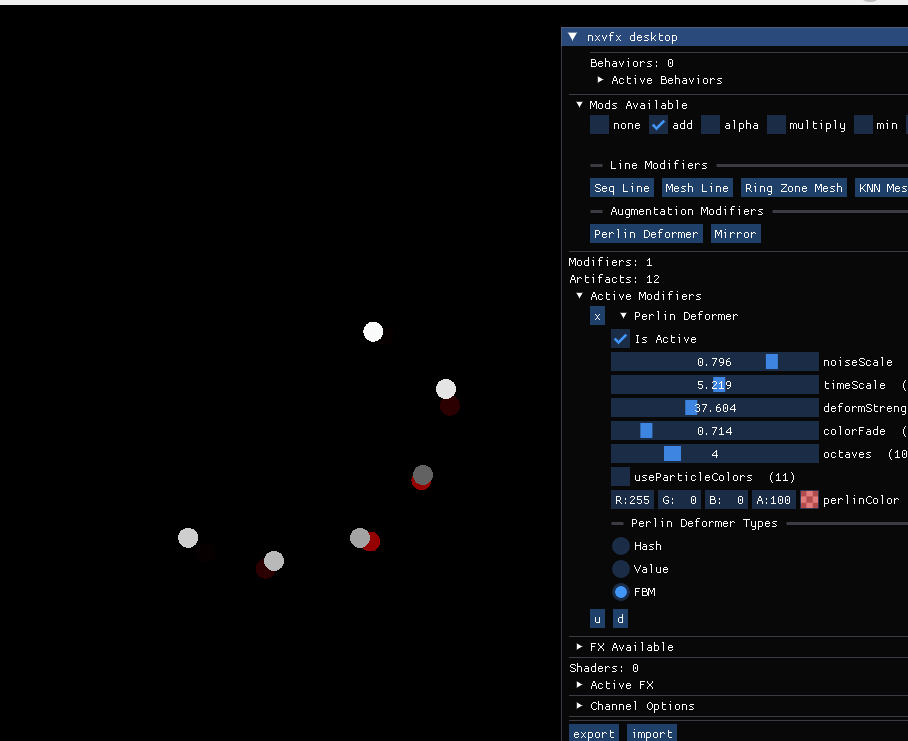

Old Perlin deformers used particle spam for noise (tragically goofy):

The solution was to create a shader, NoiseWarpShader, that generates the noise itself and takes advantage of the PreShaders. The videos show how far nyx.vfx has come.

You now have a mental image of what “correct noise + correct architecture” can buy you.

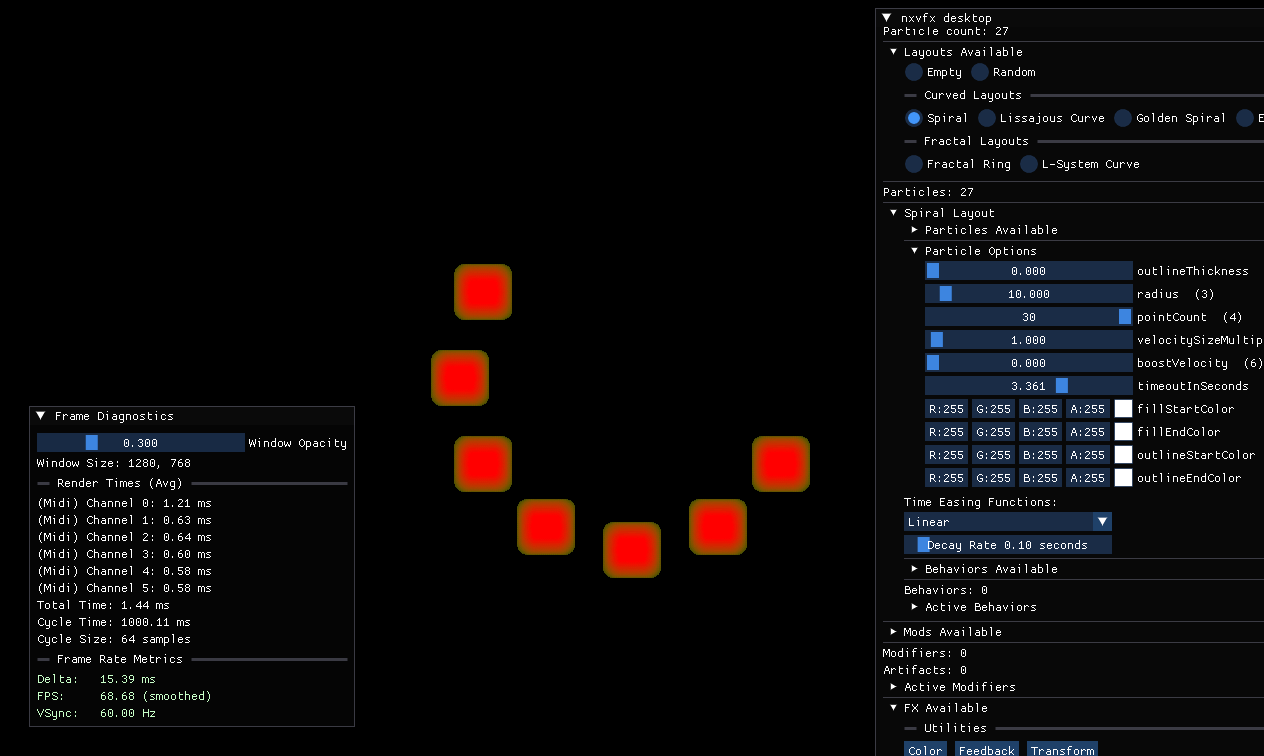

Use Case: Density Heat Map

The old density heat map relied heavily on blurs and it never came out looking right:

And the current polished version (DensityHeatMap + PreShaders only):

Evolution

This is where I append changes to what PreShaders do as I continue to integrate them into new and existing effects.

2026-01-20

- Line Masking (a line-gating technique that created a windshield-wiper effect) has been removed

- Line Field Density has replaced Line Masking and works the same way as Density Field. This lets users manage the energy of Lines and Particles together or independently

- Historical records have been increased significantly (from 8 to 128). This creates a strong blurring effect out of the box that lends itself to many of the effects
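The post does not say how the historical records are stored or combined, so the following is only an illustration of why a deeper history blurs more. It assumes a fixed-size ring buffer whose entries are averaged uniformly, and it uses a single float per record where the real effect would use textures. `HistorySketch` is a hypothetical name.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical fixed-capacity history with uniform averaging.
template <std::size_t Capacity>
class HistorySketch
{
    static_assert(Capacity > 0, "history needs at least one slot");

public:
    // Stores a new record, overwriting the oldest one once full.
    void push(float sample)
    {
        m_samples[m_next] = sample;
        m_next = (m_next + 1) % Capacity;
        if (m_count < Capacity)
            ++m_count;
    }

    // Mean of the records currently held.
    float average() const
    {
        assert(m_count > 0);
        float sum = 0.0f;
        for (std::size_t i = 0; i < m_count; ++i)
            sum += m_samples[i];
        return sum / static_cast<float>(m_count);
    }

    std::size_t size() const { return m_count; }

private:
    std::array<float, Capacity> m_samples{};
    std::size_t m_next = 0;
    std::size_t m_count = 0;
};
```

Under this model a one-frame change contributes 1/8 of the output with 8 records but only 1/128 with 128, so sudden changes fade in and out over many more frames, which reads as blur.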